Meta’s report on the 2024 elections highlights limited AI impact on disinformation due to strong moderation measures. Despite fears, AI’s role in electoral misinformation was modest, with rapid removal of harmful content. Concerns regarding censorship and biases persist among lawmakers, reflecting ongoing debates about technology in politics.

Meta has reported that artificial intelligence (AI) exerted only a modest influence on global elections in 2024, contradicting widespread concerns about its potential impact. The company’s president of global affairs, Nick Clegg, noted that Meta’s stringent measures curtailed the spread of AI-driven misinformation across its social media platforms, including Facebook, Instagram, and Threads. Despite the heightened potential for disinformation during a pivotal election year, these measures largely helped maintain the integrity of electoral discourse.

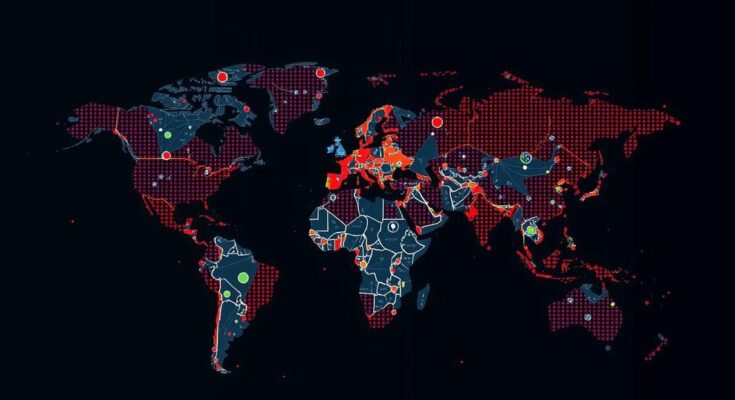

Specifically, Meta operated multiple election oversight centers worldwide and successfully disrupted numerous covert influence operations attributed primarily to Russia, Iran, and China. Clegg emphasized that the use of generative AI was ineffective for those aiming to engage in disinformation campaigns, stating, “I don’t think the use of generative AI was a particularly effective tool for them to evade our trip wires.” Furthermore, the content generated through AI, such as deepfakes, was promptly labeled or removed.

Clegg’s remarks came against a backdrop of public unease: a Pew Research survey found that a significant portion of the American public was skeptical about the role of AI in elections, with many believing it would be used predominantly for nefarious purposes. Although there were cases of AI-generated misinformation, Clegg affirmed that their impact was not as destructive as previously anticipated.

A similar assessment appeared in an op-ed by Harvard academics, who wrote that “there was AI-created misinformation and propaganda, even though it was not as catastrophic as feared,” a view consistent with Meta’s account of the moderating effect of its interventions.

Despite the successful defense against misinformation, concerns over potential censorship and biases in content moderation continue to linger, particularly among Republican lawmakers who have criticized Meta’s handling of certain viewpoints. Clegg acknowledged the delicate balance the company must maintain to ensure both the protection of transparent electoral processes and the preservation of free speech.

The concern over AI’s role in manipulating electoral outcomes has been a central topic of discussion in the context of the 2024 global elections. With an estimated 2 billion voters participating across various countries, the implications of AI on public opinion and electoral integrity were considered significant. The surge in AI capabilities, particularly in generating misleading content, prompted major tech firms like Meta to adopt comprehensive measures to monitor and regulate the spread of misinformation on their platforms. Amid ongoing geopolitical tensions, the stakes associated with maintaining platform integrity and trustworthiness have never been higher, making Meta’s report particularly relevant.

In summary, although AI-generated misinformation was present in the 2024 electoral landscape, Meta reports that its interventions significantly mitigated the impact. According to the company, its monitoring and content moderation strategies proved effective in countering disinformation attempts, particularly those by state actors. Nevertheless, the public remains skeptical about the future of AI in elections, and concerns about censorship and bias in content moderation continue to raise questions about the role of technology in democratic processes.

Original Source: www.aljazeera.com